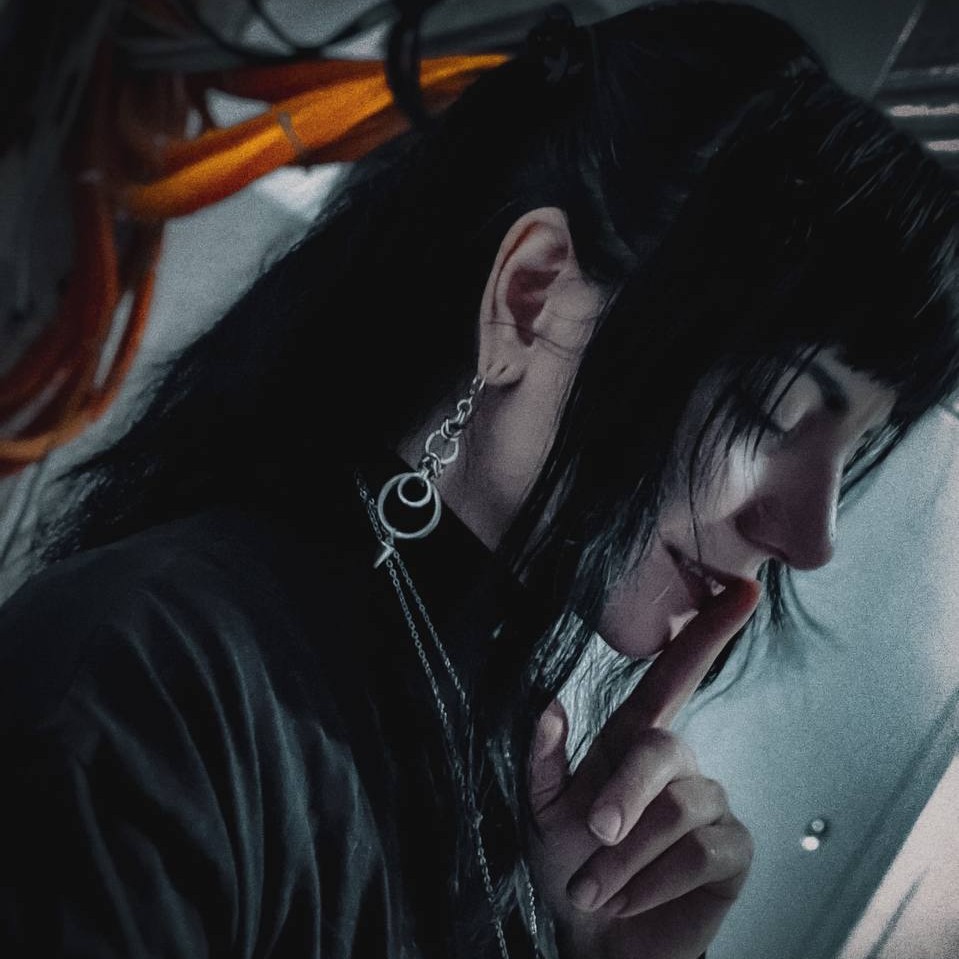

Digital art, Procreate + Google DeepDream

Art-talk

This piece was created using digital art tools (Procreate) in collaboration with Google’s DeepDream.

Before the widespread proliferation of today’s meticulously correct GenAI, there was a time of tripping models. Google’s DeepDream was a particularly popular early example.

By that time, I had already begun a practice of feeding my own art, specifically its intermediate stages, into whatever AI models I could lay my hands on. I would then selectively integrate some of the resulting forms back into the original work. This piece became one of my first explorations down the path of seeking symbiosis - and honestly, just playful creativity.

Nerd-talk

DeepDream is based on a Convolutional Neural Network (CNN), the same type of model used for image recognition. The key difference lies in how the network is used:

Image Recognition: A CNN is trained to look at an image and identify what it sees (e.g., “That’s a cat”).

DeepDream (Image Generation): Instead of asking the network what it sees, we tell it to look at an existing image and enhance what it “thinks” it sees.

- Run the art piece through the CNN

- Select a specific layer within the network (each layer learns to recognize increasingly complex features, from basic edges to full objects)

- Crank up the activation of the neurons in that selected layer

The model then adjusts the input image to make the features it already recognizes in that layer more prominent, essentially drawing the model’s abstract internal representation of those features directly onto the input image. In DeepDream’s case, the results were wildly psychedelic, colorful, trippy abnormities: the internal “vision” of the network itself :D
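For the curious, here is a minimal sketch of that "crank up the activation" loop in plain Python. It is a toy under loud assumptions, not the real DeepDream code: a single hand-written 3x3 edge filter (`KERNEL`) stands in for the selected layer, the gradient is worked out by hand instead of by a deep learning framework, and every name in it (`activations`, `objective`, `gradient`, `dream`) is made up for illustration. The core idea should be the same, though: the network stays fixed, and the image itself is nudged, step by step, in whatever direction makes the layer respond more strongly (gradient ascent on the pixels).

```python
# Toy illustration only: one hand-written 3x3 filter stands in for a "layer".
# Real DeepDream uses a trained deep CNN and automatic differentiation.

KERNEL = [[-1.0, 0.0, 1.0],
          [-2.0, 0.0, 2.0],
          [-1.0, 0.0, 1.0]]  # hypothetical "feature": a vertical edge


def activations(img):
    """Slide KERNEL over img (no padding) and return the toy layer's output."""
    h, w = len(img), len(img[0])
    return [[sum(KERNEL[a][b] * img[i + a][j + b]
                 for a in range(3) for b in range(3))
             for j in range(w - 2)]
            for i in range(h - 2)]


def objective(img):
    """How strongly the layer responds: half the sum of squared activations."""
    return 0.5 * sum(v * v for row in activations(img) for v in row)


def gradient(img):
    """Gradient of objective(img) with respect to every input pixel."""
    h, w = len(img), len(img[0])
    act = activations(img)
    grad = [[0.0] * w for _ in range(h)]
    for i in range(h - 2):
        for j in range(w - 2):
            for a in range(3):
                for b in range(3):
                    grad[i + a][j + b] += act[i][j] * KERNEL[a][b]
    return grad


def dream(img, steps=20, step_size=0.01):
    """Gradient ascent on the pixels: amplify what the layer already 'sees'."""
    img = [row[:] for row in img]  # work on a copy, keep the original intact
    for _ in range(steps):
        grad = gradient(img)
        # Normalize so each step changes a pixel by at most step_size.
        scale = max(abs(g) for row in grad for g in row) or 1.0
        for i, row in enumerate(grad):
            for j, g in enumerate(row):
                # Take the step, then clip to the valid brightness range [0, 1].
                img[i][j] = min(1.0, max(0.0, img[i][j] + step_size * g / scale))
    return img


# A 6x6 grayscale "artwork" with a faint vertical edge down the middle.
image = [[0.4, 0.4, 0.4, 0.6, 0.6, 0.6] for _ in range(6)]
dreamed = dream(image)

print("layer response before:", objective(image))
print("layer response after: ", objective(dreamed))
```

The faint edge in the input gets exaggerated because an edge is the only thing this toy "layer" knows how to see. Swap the single filter for a deep layer of a trained network that has learned eyes, fur and dog faces, and the same loop starts painting those into the picture instead.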